Unlock the Power of Model Context Protocol: A Deep Dive into the Future of AI Agents

Introduction

The landscape of artificial intelligence is evolving rapidly, with new protocols and techniques emerging constantly. One recent development gaining significant traction among developers is the Model Context Protocol (MCP). This protocol standardizes how Large Language Models (LLMs) interact with external applications and data sources, making it easier to build capable, versatile AI agents. This article explains what MCP is, how it differs from traditional function calling, and how you can start implementing it in your own projects.

What is the Model Context Protocol?

MCP is an open protocol, introduced by Anthropic in November 2024, that standardizes how LLM applications connect to external tools and data. It acts as a bridge: through it, an AI application can call APIs, query databases, or read files, giving the model access to actions and information beyond its training data. Because every connection follows the same specification, a tool built for MCP can be reused by any application that supports the protocol.

MCP vs. Traditional Function Calling

Traditional function calling works, but it can be cumbersome and inflexible. Developers typically write a tool definition in a particular model provider's format, plus the glue code that executes the call, for *each* API or tool they want to integrate. The result is a custom interface for every connection, which is time-consuming to build and hard to maintain as the number of integrations grows.

MCP takes a more standardized approach. The model still decides when to call a tool, but the tools live in MCP servers that describe themselves in a common format. An MCP client discovers those tools at runtime and forwards the model's tool calls to the right server, so any MCP-compatible application can use any MCP server without custom integration code.

Key differences:

Abstraction: The application talks to every tool through the same protocol, so it does not need tool-specific integration code.

Standardization: MCP uses a single message format (JSON-RPC 2.0) and a common way to describe tools, so one server works with any compatible client.

Scalability: Each integration is a separate, self-contained server, so integrations can be added, removed, or updated without changing the application itself.
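To make the standardization point concrete, here is a minimal Python sketch (Python being the setup this article mentions later) of the self-description every MCP tool carries: a `name`, a `description`, and a JSON Schema `inputSchema`. The `describe_tool` helper and the `search_issues` tool are hypothetical, and only a few Python types are mapped; official SDKs generate comparable descriptors automatically, though their exact output may differ.

```python
import inspect
import json
from typing import get_type_hints

# Map Python annotations to JSON Schema type names (a deliberately small subset).
_JSON_TYPES = {str: "string", int: "integer", float: "number", bool: "boolean"}


def describe_tool(func) -> dict:
    """Build an MCP-style tool descriptor (name, description, inputSchema)
    from a plain Python function's signature, type hints, and docstring."""
    hints = get_type_hints(func)
    properties, required = {}, []
    for name, param in inspect.signature(func).parameters.items():
        # Unannotated or unrecognized parameters fall back to "string".
        properties[name] = {"type": _JSON_TYPES.get(hints.get(name), "string")}
        if param.default is inspect.Parameter.empty:
            required.append(name)  # no default value, so the caller must supply it
    return {
        "name": func.__name__,
        "description": inspect.getdoc(func) or "",
        "inputSchema": {"type": "object", "properties": properties, "required": required},
    }


# Hypothetical example tool, used only to exercise describe_tool.
def search_issues(query: str, limit: int = 10) -> str:
    """Search the issue tracker."""
    return f"Top {limit} issues matching {query!r}"


if __name__ == "__main__":
    print(json.dumps(describe_tool(search_issues), indent=2))
```

Any client that understands this descriptor format can list and call `search_issues` without tool-specific glue code, which is what lets one server work across many applications.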

How Does the MCP Architecture Work?

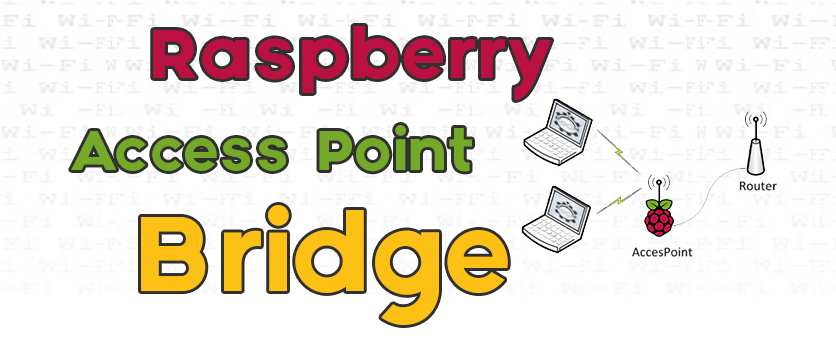

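The MCP architecture follows a client-server model. A host application, such as a desktop chat app or an IDE, runs one or more MCP clients, and each client connects to a single server. The key components are:

Clients: These run inside the host application, maintain the connection to a server, and relay requests and results between that server and the LLM.

Servers: These expose capabilities to the LLM. Each server offers a set of tools and resources (and, optionally, reusable prompt templates).

Resources: These represent data the server makes available as context, such as files, database records, or API responses.

Tools: These are the specific functions the server provides, allowing the LLM to perform actions.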
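The roles above can be modeled in a few lines of Python. This is a toy, in-process sketch under stated assumptions, not the MCP SDK: `ToyServer`, `ToyClient`, and `Host` are hypothetical names, and a real client exchanges messages with its server over a transport instead of calling it directly. It shows the structural point: the host (the application the user runs) keeps one client per server, merges the servers' tool lists, and routes each tool call the model makes to the server that owns the tool.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List


@dataclass
class ToyServer:
    """Hypothetical stand-in for an MCP server: named tools plus readable resources."""
    name: str
    tools: Dict[str, Callable[..., str]] = field(default_factory=dict)
    resources: Dict[str, str] = field(default_factory=dict)  # URI -> contents


class ToyClient:
    """Hypothetical stand-in for an MCP client: it holds exactly one server connection."""

    def __init__(self, server: ToyServer) -> None:
        self.server = server

    def list_tools(self) -> List[str]:
        return sorted(self.server.tools)

    def call_tool(self, name: str, arguments: dict) -> str:
        return self.server.tools[name](**arguments)

    def read_resource(self, uri: str) -> str:
        return self.server.resources[uri]


class Host:
    """The application the user runs. It creates one client per server
    and routes each tool call from the model to the right client."""

    def __init__(self, servers: List[ToyServer]) -> None:
        self.clients = [ToyClient(server) for server in servers]

    def tool_routes(self) -> Dict[str, ToyClient]:
        # Merge every server's tools into one map (name clashes are ignored in this sketch).
        return {tool: client for client in self.clients for tool in client.list_tools()}

    def run_tool_call(self, name: str, arguments: dict) -> str:
        return self.tool_routes()[name].call_tool(name, arguments)


notes = ToyServer("notes", resources={"file:///todo.txt": "buy milk"})
calc = ToyServer("calc", tools={"add": lambda a, b: str(a + b)})
host = Host([notes, calc])
print(host.run_tool_call("add", {"a": 2, "b": 3}))  # prints 5
```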

When the LLM decides to use a tool, the client sends the request to the server over MCP, whose messages follow the JSON-RPC 2.0 format. The server executes the requested tool and returns the result, which the client passes back to the model.
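On the wire, MCP messages are JSON-RPC 2.0 requests and responses: a client discovers a server's tools with the `tools/list` method and runs one with `tools/call`. The standard-library sketch below hand-rolls the server side of those two methods to show the message shapes. The `echo` tool, the `TOOLS` registry, and the `handle` function are hypothetical, error handling is minimal, and a production server would normally rely on an MCP SDK instead.

```python
import json

# Hypothetical registry: tool name -> (descriptor advertised to clients, implementation).
TOOLS = {
    "echo": (
        {
            "name": "echo",
            "description": "Return the given text unchanged.",
            "inputSchema": {
                "type": "object",
                "properties": {"text": {"type": "string"}},
                "required": ["text"],
            },
        },
        lambda text: text,
    ),
}


def error_response(request_id, code: int, message: str) -> str:
    """Serialize a JSON-RPC 2.0 error response."""
    return json.dumps({"jsonrpc": "2.0", "id": request_id,
                       "error": {"code": code, "message": message}})


def handle(raw: str) -> str:
    """Answer one JSON-RPC 2.0 request for the tools/list or tools/call method."""
    request = json.loads(raw)
    request_id, method = request.get("id"), request.get("method")
    params = request.get("params", {})
    if method == "tools/list":
        result = {"tools": [descriptor for descriptor, _ in TOOLS.values()]}
    elif method == "tools/call":
        if params.get("name") not in TOOLS:
            return error_response(request_id, -32602, f"Unknown tool: {params.get('name')}")
        _, impl = TOOLS[params["name"]]
        text = impl(**params.get("arguments", {}))
        # Tool output goes back as a list of typed content items.
        result = {"content": [{"type": "text", "text": text}], "isError": False}
    else:
        return error_response(request_id, -32601, f"Method not found: {method}")
    return json.dumps({"jsonrpc": "2.0", "id": request_id, "result": result})


call = {"jsonrpc": "2.0", "id": 2, "method": "tools/call",
        "params": {"name": "echo", "arguments": {"text": "hi"}}}
print(handle(json.dumps(call)))
```

The printed response carries the tool output in `result.content` as `[{"type": "text", "text": "hi"}]`; a request for an unsupported method gets a JSON-RPC error object instead.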

A Practical Example: Building a Weather Server

To illustrate how MCP works in practice, let’s consider a simple example: building a weather server. This server would expose two key tools:

`get_alerts`: Retrieves weather alerts for a specific US state.

`get_forecast`: Provides a weather forecast for a given location (latitude and longitude).

The server would need access to a weather API or database to retrieve the relevant data. The LLM could then request a forecast for a specific city by simply calling the `get_forecast` tool with the appropriate coordinates.

Implementing MCP: A Step-by-Step Guide

Implementing MCP typically involves the following steps:

1. Set up the Development Environment: Install a language runtime and an MCP SDK. For Python, that means Python itself and the official `mcp` package.

2. Create the Server: Define the functionalities (tools) and resources that your server will expose.

3. Implement the Tools: Write the code that performs the desired actions for each tool.

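4. Define the Server Interface: Give each tool a name, a description, and a typed input schema so clients can discover and call it. SDKs such as the Python `mcp` package can derive these from function signatures and docstrings. Also choose a transport, such as standard input/output (stdio) for a server that runs locally.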

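5. Integrate with a Client: Connect the server to an MCP client, such as Claude Desktop or an MCP-enabled IDE, usually by registering the command that launches the server. The client then starts the server, discovers its tools, and makes them available to the LLM.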
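Steps 4 and 5 meet at the transport. For a local server launched by a desktop client, MCP's stdio transport exchanges newline-delimited JSON-RPC messages over the server's stdin and stdout, beginning with an `initialize` request from the client followed by a `notifications/initialized` notification. The sketch below is a hand-rolled, standard-library illustration of that loop, not the SDK: the server name and the `respond`/`serve_lines`/`main` helpers are hypothetical, only `initialize` and `ping` are handled, and `2024-11-05` is simply one published protocol revision used as an example value.

```python
import json
import sys
from typing import Iterable, Iterator, Optional

# One published MCP protocol revision, used here only as an example value.
PROTOCOL_VERSION = "2024-11-05"


def respond(message: dict) -> Optional[dict]:
    """Handle one JSON-RPC message. Returns a response, or None for notifications."""
    if "id" not in message:
        return None  # notifications such as notifications/initialized get no reply
    method = message.get("method")
    if method == "initialize":
        # A real server would negotiate the version the client asked for.
        result = {
            "protocolVersion": PROTOCOL_VERSION,
            "capabilities": {"tools": {}},
            "serverInfo": {"name": "weather-sketch", "version": "0.1.0"},
        }
    elif method == "ping":
        result = {}
    else:
        return {"jsonrpc": "2.0", "id": message["id"],
                "error": {"code": -32601, "message": f"Method not found: {method}"}}
    return {"jsonrpc": "2.0", "id": message["id"], "result": result}


def serve_lines(lines: Iterable[str]) -> Iterator[str]:
    """Turn incoming newline-delimited JSON-RPC messages into reply lines."""
    for line in lines:
        if line.strip():
            reply = respond(json.loads(line))
            if reply is not None:
                yield json.dumps(reply)  # json.dumps never emits raw newlines


def main() -> None:
    # stdout carries protocol messages only; any logging should go to stderr.
    for reply in serve_lines(sys.stdin):
        print(reply, flush=True)
```

A client would launch this script as a subprocess (with an entry point that calls `main()`), write requests to its stdin, and read replies from its stdout. An SDK such as the Python `mcp` package provides this transport, the handshake, and version negotiation for you.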

The Future of AI Agents

The Model Context Protocol is well positioned to play a significant role in the future of AI agents. By simplifying integration and promoting standardization, MCP makes it easier for developers to build more capable and versatile agents. As the ecosystem of MCP servers and clients grows, we can expect more innovative applications to emerge.
